Data Lake Service¶

1. Introduction¶

Data Lake Service (DLS) is a data storage, cataloging and search service. It provides a set of APIs to upload files to and download files from the TCUP Data Lake along with their metadata. DLS also provides APIs to search files by metadata, share files among DLS users and link one file to another through user-defined relationships.

The uploaded files are stored in a distributed file system within the TCUP cloud. The Data Lake can store any type of data file, subject to the configured size limit described in section 2.2. Examples include sensor logs, application log files, images, binary files, analytics output files, machine learning models and device firmware packages. The service can be used by data scientists, applications, other TCUP services and any other interested clients.

The Data Lake Service can also be used in a mode where the files are stored outside TCUP, for example in external file systems, cloud storage or databases. In such cases, DLS stores only the metadata and provides search, linking, retrieval and cataloging services.

In a recent release of DLS, a new capability was added to define data point entities that correspond to the FAIR data principles. DLS tasks can therefore be broadly classified into two mutually independent types:

core DLS tasks

data point tasks

The core DLS tasks fall into four broad categories:

File management - Includes file upload, overwrite, append, archive, download and delete.

Cataloging - Allows users to search files and to view file lineage, metadata, organization, directory hierarchy and usage statistics. File search offers a wide range of filter parameters for flexibility.

Access control - Includes tenant and user management, file sharing, directory-based permission management, setting up organizational positions and storage limit control.

Metadata management - Includes metadata governance through metadata schemas and directory rules. It also covers associating metadata with files and linking two files.

Data point tasks comprise APIs to manage repositories, catalogs, datasets and distributions, along with APIs for provenance and permission control.

1.1 Intended Audience¶

The intended audience of this document is anyone who wants an overview of the TCUP Data Lake Service. After reading this document, the reader will understand the capabilities of the TCUP Data Lake Service as part of the TCUP IoT platform.

2. Key Concepts¶

To use the Data Lake Service, a user needs to understand some basic DLS concepts. The following sections describe them:

2.1 Catalog¶

A catalog is a read-only view of the user’s account in DLS from various perspectives. A user can list files using filter parameters such as file name, extension, upload time, size and metadata. This is called a file catalog. It shows details about each file, including its download URI. A variant of the file catalog is the directory catalog, in which the content of the file catalog is displayed in a virtual directory hierarchy. A user can also fetch the lineage of a particular file; lineage is a brief history of the operations performed on that file. A few other APIs simply return the list of file URIs in DLS, the list of metadata keys existing in DLS, or the list of relation names used in DLS.

2.2 File¶

DLS can store any type of file and is completely agnostic of file content. For a particular DLS user, a file must be uniquely named before it is stored in DLS. There is a limit on the maximum size of a file that can be uploaded to DLS; by default, it is 5GB. The limit can be increased or decreased by changing the DLS configuration. Files can be physically deleted from storage, but DLS keeps the file-related information in its database. Each file successfully uploaded to DLS has a unique file-uri.

However, a DLS user can add files with the same name in the following cases:

files with the same name but different savepoints

files with the same name in different directories

a duplicate file uploaded to DLS in APPEND, ARCHIVE or OVERWRITE mode

a file that is deleted and then uploaded again with the same name
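
The naming rule and its exceptions can be read as a composite key. The following is a minimal sketch of that reading, not the DLS implementation: `FileKey`, `FileRegistry` and their fields are hypothetical names, and treating 5GB as 5 × 1024³ bytes is an assumption.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Default limit from the text; the exact byte count DLS uses is an assumption.
MAX_FILE_SIZE_BYTES = 5 * 1024**3
DUPLICATE_MODES = {"APPEND", "ARCHIVE", "OVERWRITE"}


@dataclass(frozen=True)
class FileKey:
    """Hypothetical identity of a stored file for one DLS user."""
    owner: str
    name: str
    directory: Optional[str] = None
    savepoint: Optional[str] = None


class FileRegistry:
    """In-memory stand-in for the DLS file table (illustration only)."""

    def __init__(self) -> None:
        self._active: Set[FileKey] = set()

    def upload(self, key: FileKey, size: int, mode: Optional[str] = None) -> bool:
        """Return True if the upload would be accepted under the rules above."""
        if size > MAX_FILE_SIZE_BYTES:
            return False
        if key in self._active and mode not in DUPLICATE_MODES:
            # Same user, name, directory and savepoint already present.
            return False
        self._active.add(key)
        return True

    def delete(self, key: FileKey) -> None:
        """Free the name; DLS itself still keeps the file's record."""
        self._active.discard(key)
```

In this sketch a second upload of `FileKey("alice", "log.csv")` is rejected unless a duplicate mode is given, while the same name with a different directory or savepoint forms a different key and is accepted.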

2.3 Out-of-band File¶

Files stored in external locations can also be registered in DLS as out-of-band files. Instead of a file name, these files are identified by a fully qualified URL pointing to the external location. To register an out-of-band file, only the file URL is sent to the DLS file upload API. Although clients store and retrieve out-of-band files externally, DLS can still maintain the file catalog and perform other operations on the file. The out-of-band file URL must be unique across the whole of DLS. This means that the same out-of-band URL cannot be used by two users, whether they belong to the same tenant or to different tenants. This ensures that the same external file is never referenced more than once at the same time.
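
The global uniqueness of out-of-band URLs can be illustrated with a small registry. This is only a sketch under stated assumptions: `OutOfBandRegistry` is a hypothetical name, and the check for a "fully qualified" URL (a scheme plus a host or path) is a guess at what DLS requires.

```python
from typing import Dict, Tuple
from urllib.parse import urlparse


class OutOfBandRegistry:
    """Hypothetical registry: one URL may be registered only once in all of DLS."""

    def __init__(self) -> None:
        # URL -> (tenant, user) that registered it
        self._owners: Dict[str, Tuple[str, str]] = {}

    def register(self, tenant: str, user: str, url: str) -> bool:
        parsed = urlparse(url)
        if not parsed.scheme or not (parsed.netloc or parsed.path):
            raise ValueError(f"not a fully qualified URL: {url!r}")
        if url in self._owners:
            # Already taken, regardless of which tenant or user asks.
            return False
        self._owners[url] = (tenant, user)
        return True
```

A second `register` call with the same URL returns `False` even when it comes from a different tenant.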

2.4 Metadata¶

Metadata is a key-value pair that describes information related to a file. By default, users add metadata keys and values in an ad-hoc manner. When ad-hoc metadata is added to a file, the datatype of the metadata value is always assumed to be text. Metadata can also be specified for a directory, where it serves as a directory rule.

2.5 Metadata Schema¶

A DLS admin can define a metadata schema to prevent users from adding ad-hoc metadata. A metadata schema is tenant specific: once it is defined, all users of the tenant become schematic and must follow the schema. By default, all tenants are non-schematic. An admin may also define a metadata schema and still allow users to add ad-hoc metadata.
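
The difference between non-schematic tenants, schematic tenants and schematic tenants that still allow ad-hoc metadata can be sketched as a validation function. The type names (`text`, `integer`, `decimal`), the function name and the error messages are assumptions for illustration; the datatypes that a real DLS schema supports are not listed in this document.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical datatype names mapped to parsers that raise ValueError on bad input.
PARSERS: Dict[str, Callable[[str], object]] = {
    "text": str,
    "integer": int,
    "decimal": float,
}


def validate_metadata(
    metadata: Dict[str, str],
    schema: Optional[Dict[str, str]] = None,
    allow_adhoc: bool = True,
) -> List[str]:
    """Return a list of problems; an empty list means the metadata is accepted."""
    errors: List[str] = []
    if schema is None:
        # Non-schematic tenant: every key is ad hoc and its value is text.
        return errors
    for key, value in metadata.items():
        if key not in schema:
            if not allow_adhoc:
                errors.append(f"ad-hoc key not allowed: {key}")
            continue
        try:
            PARSERS[schema[key]](value)
        except ValueError:
            errors.append(f"{key}: expected {schema[key]}, got {value!r}")
    return errors
```

For example, with the schema `{"altitude_ft": "integer"}`, the value `"35000"` passes and the value `"high"` is reported as an error.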

2.6 Directory¶

DLS admins can register a directory independently in DLS. One or more files may be associated with a directory. A directory is an optional concept in DLS and may not be required at all for a specific use case. Note that files are not physically stored in DLS-managed storage according to their directories. A directory provides a virtual hierarchy of files that is visible to users. The update directory API for files may be used to virtually move (cut and paste) a file from one directory to another. DLS provides a directory-based catalog API to render the directory hierarchy and the associated files. A directory can be deleted provided that no file is associated with it.

2.7 Directory Permission¶

Access control permissions given on a directory apply to the files associated with it. Directories may have sub-directories. Permissions must be set explicitly at each directory depth; they are not inherited automatically from parent or child directories.
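
The absence of inheritance amounts to an exact-match lookup on the directory path. The sketch below assumes a hypothetical in-memory permission table; the structure and the single-letter permission codes are illustrative only.

```python
from typing import Dict, Set

# directory path -> user -> permissions held on exactly that directory
Permissions = Dict[str, Dict[str, Set[str]]]


def allowed(perms: Permissions, user: str, directory: str, action: str) -> bool:
    """Exact-match lookup: no fallback to parent or child directories."""
    return action in perms.get(directory, {}).get(user, set())


perms: Permissions = {"/projects": {"alice": {"R", "W"}}}
print(allowed(perms, "alice", "/projects", "R"))      # permission set on this depth
print(allowed(perms, "alice", "/projects/raw", "R"))  # sub-directory needs its own entry
```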

2.8 Directory Rule¶

A directory may also be used to set up a metadata rule for files. When a directory-based metadata rule is set, admins can ensure that DLS users provide a mandatory set of metadata when uploading files associated with the directory.
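
A directory rule of this kind can be pictured as a set of required metadata keys per directory. The rule format and the function name below are assumptions made for this sketch, not the DLS rule syntax.

```python
from typing import Dict, List, Set

# directory -> metadata keys every uploaded file must carry (hypothetical format)
DirectoryRules = Dict[str, Set[str]]


def missing_metadata(
    rules: DirectoryRules, directory: str, metadata: Dict[str, str]
) -> List[str]:
    """Return the mandatory keys that the upload request did not supply."""
    required = rules.get(directory, set())
    return sorted(required - set(metadata))
```

An upload to a directory would be rejected in this model whenever `missing_metadata` returns a non-empty list; directories without a rule accept any metadata.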

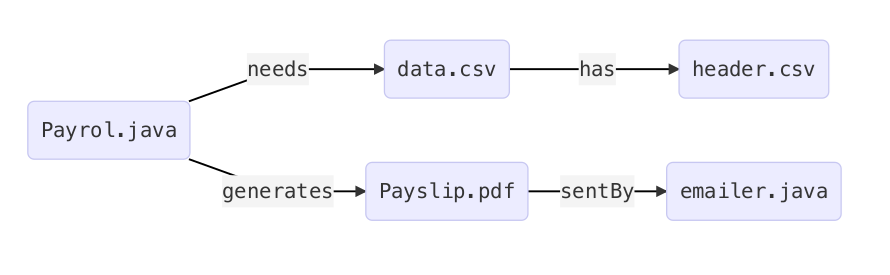

2.9 Link¶

A link is a special kind of file metadata that records a relation between two existing files in DLS. Links can be created and fetched in many use cases: the two files may be a program file and its data file, an input and an output file, a raw file and its processed file, or two parts split from a main file. The same relation name can be used in multiple links, and two files can be linked by more than one relation name. Links let the user build an unbounded graph of files and relation names.
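
The graph formed by links can be sketched as labelled edges between file URIs. This is an illustration only: `LinkGraph` is a hypothetical class, the URIs and relation names are invented, and treating links as directed edges is an assumption.

```python
from collections import defaultdict, deque
from typing import DefaultDict, List, Set, Tuple


class LinkGraph:
    """Files are nodes; each link is an edge labelled with a relation name."""

    def __init__(self) -> None:
        self._out: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def link(self, source_uri: str, relation: str, target_uri: str) -> None:
        self._out[source_uri].append((relation, target_uri))

    def unlink(self, source_uri: str, relation: str, target_uri: str) -> None:
        self._out[source_uri].remove((relation, target_uri))

    def relations(self, source_uri: str, target_uri: str) -> List[str]:
        """All relation names that connect the two files."""
        return [r for r, t in self._out.get(source_uri, []) if t == target_uri]

    def reachable(self, start_uri: str) -> Set[str]:
        """Every file that can be reached by following links from start_uri."""
        seen: Set[str] = set()
        queue = deque([start_uri])
        while queue:
            current = queue.popleft()
            for _, target in self._out.get(current, []):
                if target not in seen and target != start_uri:
                    seen.add(target)
                    queue.append(target)
        return seen
```

Linking a raw file to an intermediate file and the intermediate file to a final output makes both later files reachable from the raw file, which is how a chain of processing steps can be traced.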

2.10 Users¶

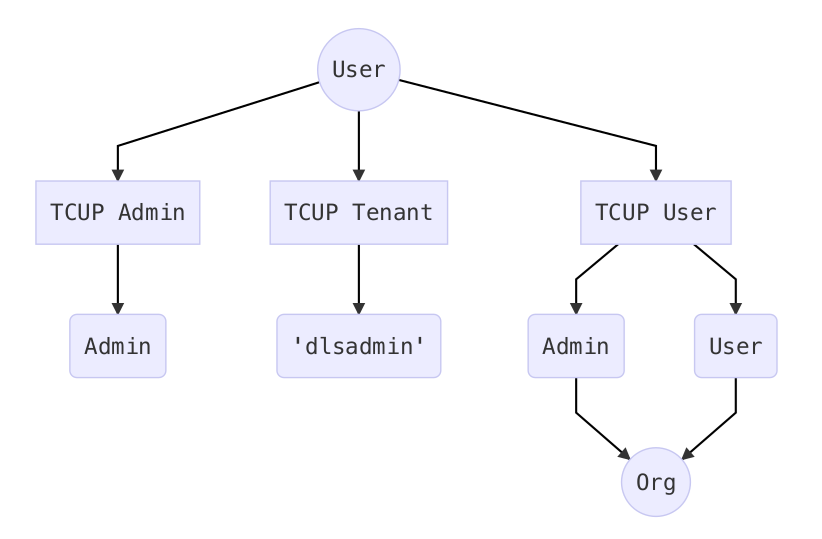

The accompanying diagram shows the users and roles in DLS. Entities such as TCUP admin, TCUP tenant and TCUP user must be provisioned individually in DLS for it to work properly. The TCUP admin is mapped to a DLS admin, which has the privileges to invoke administrative APIs. Provisioning a TCUP tenant in DLS automatically provisions a tenant-level DLS admin user called dlsadmin. All other TCUP users are mapped directly to users in DLS. If an organization structure is enforced in DLS, TCUP users can further be provisioned as either Organization Admins or Organization Users at various levels of organization position.

| Storage Type | Category | Use Case Examples |
| --- | --- | --- |
| Hadoop (TCUP managed HDFS) | Internal | In-premises or private cloud DLS installation |
| DLS Local File System (NFS mount) | Internal | Cost-effective alternative of HDFS storage |
| Azure HDInsight / AWS EMR | Internal | Large installations |
| Azure Blob Store / AWS S3 | External | Cost effective alternative of managed Hadoop |
| Client’s Local File System | External | Large files having restriction in movements |

2.11 Basic Access Control¶

In DLS, files are logically isolated for each user of a tenant. This means that files uploaded by one DLS user are not accessible to others. There is, however, a file share API with which a user can allow others to read (download) a file. There is no way to access files belonging to users of a different tenant. In this basic access control model, the uploader of a file is considered its owner and can perform operations such as download, overwrite, archive, delete and share on it. The single default administrator, dlsadmin, has no special privilege to access other users’ files unless those users explicitly share them.
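
The basic model reduces to three checks: same tenant, owner, or shared for download. The sketch below is a simplified reading of the paragraph above; `StoredFile` and `can_perform` are hypothetical names, not DLS API elements.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class StoredFile:
    tenant: str
    owner: str
    shared_with: Set[str] = field(default_factory=set)


def can_perform(file: StoredFile, tenant: str, user: str, operation: str) -> bool:
    """Decide whether `user` of `tenant` may run `operation` on `file`."""
    if tenant != file.tenant:
        return False  # files are never visible across tenants
    if user == file.owner:
        return True   # the uploader owns the file and may do anything
    # Everyone else, dlsadmin included, gets read-only access if the file is shared.
    return operation == "download" and user in file.shared_with
```

Note that `dlsadmin` is not special-cased anywhere, which mirrors the rule that the default administrator needs an explicit share like any other user.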

2.12 Directory-based Access Control¶

In some scenarios, users of the same tenant may need to work on a common set of files. The concept of a directory helps to achieve that. A directory is not just metadata of a file; it also serves as an access control measure for all the files attached to it. A directory can therefore be regarded as a project or workspace in which multiple users have various levels of access. When an administrator defines a directory and allows various users (of the same tenant only) to read, write or delete files in it, the directory becomes a way for multiple users to work on a common set of files. A user with read (R) access on a directory can only download files from it. Write (W) access allows the user to upload new files to be associated with that directory. Delete (D) access allows the user to delete a file.

Regardless of who uploads a file, the administrator can grant R, W and D access to users or revoke it from them. In contrast to basic access control, only the administrator is the owner of the directory and of all files associated with it, so by default the administrator can perform all operations on those files. One directory can have many users, and one user can have access to many directories. Only the administrator can create, modify or delete directories and permissions. Once a file is under directory-based access control, it cannot be shared with other users through the regular share API. An existing file in DLS can be moved from basic access control to directory-based access control and vice versa.
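
The R, W and D grants can be modelled as bit flags held per user on a directory, with the administrator as implicit owner. This is a sketch under assumptions: the `Access` flag, the `Directory` class and the operation names are hypothetical and do not reflect the DLS permission API.

```python
from enum import Flag, auto
from typing import Dict


class Access(Flag):
    NONE = 0
    R = auto()  # download files from the directory
    W = auto()  # upload new files to the directory
    D = auto()  # delete files in the directory


REQUIRED = {"download": Access.R, "upload": Access.W, "delete": Access.D}


class Directory:
    def __init__(self, path: str, admin: str) -> None:
        self.path = path
        self.admin = admin
        self._grants: Dict[str, Access] = {}

    def _check_admin(self, actor: str) -> None:
        if actor != self.admin:
            raise PermissionError("only the administrator manages permissions")

    def grant(self, actor: str, user: str, access: Access) -> None:
        self._check_admin(actor)
        self._grants[user] = self._grants.get(user, Access.NONE) | access

    def revoke(self, actor: str, user: str, access: Access) -> None:
        self._check_admin(actor)
        self._grants[user] = self._grants.get(user, Access.NONE) & ~access

    def can(self, user: str, operation: str) -> bool:
        if user == self.admin:
            return True  # the administrator owns the directory and its files
        return bool(self._grants.get(user, Access.NONE) & REQUIRED[operation])
```

Because ownership rests with the administrator, a user who uploaded a file through W access still cannot delete it without D access in this model.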

2.13 Organizational Access Control¶

In some scenarios, users are part of an existing organization structure, and the administrators and users come from various positions in the organization. The two access control models above are not sufficient in that case, because the organization has various hierarchical positions with varying roles. Here a tenant is not a person who accesses the DLS API but a logical group of many users. An organization may also have more than one tenant in DLS. Each organization has one or more named administrators (as opposed to the default dlsadmin described above) mapped to DLS admins. These are the top-level admins of the organization. They have full access to all files uploaded to DLS by all admins and users hierarchically below them in the organization.

Based on the organizational structure, there can be other admins lower down the hierarchy. These admins and users can be created and marked with their organization_position accordingly. When a user is given permission on a directory, all admins hierarchically above that user inherit full access on the directory as well. This behavior can be overridden by creating a specific permission for the admin on that directory. Note that access privileges can be granted by higher-level admins only. Organizational access control extends directory-based access control and gives admins additional visibility into the directories, files and permissions in the organization. This makes file management more administrator driven and aligned with the organization’s existing hierarchy.

Which access control model should be used when?

Basic access control is the default model. It is suitable when files are not shared among DLS users, or are shared only occasionally and read-only.

Directory-based access control allows easy sharing of files as well as the creation of workspace or project models.

Organizational access control is a more advanced option for cases where administrators need visibility into the files of various users of an organization.
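
The inheritance rule for admins above a grantee can be sketched as a walk up the reporting chain. The sketch assumes a tree-shaped hierarchy given as a hypothetical `reports_to` mapping and covers a single directory; how DLS stores organization positions is not described here.

```python
from typing import Dict, List, Optional, Set

FULL_ACCESS = {"R", "W", "D"}


def admins_above(person: str, reports_to: Dict[str, Optional[str]]) -> List[str]:
    """Walk up the hierarchy and collect every admin above `person`."""
    chain: List[str] = []
    current = reports_to.get(person)
    while current is not None:
        chain.append(current)
        current = reports_to.get(current)
    return chain


def effective_access(
    person: str,
    grants: Dict[str, Set[str]],
    reports_to: Dict[str, Optional[str]],
) -> Set[str]:
    """Access of `person` on one directory, given its explicit grants."""
    if person in grants:
        # An explicit permission wins, including over inherited full access.
        return set(grants[person])
    for grantee in grants:
        if person in admins_above(grantee, reports_to):
            return set(FULL_ACCESS)
    return set()
```

With `operator` reporting to `site-admin` and `site-admin` reporting to `org-admin`, a read grant to `operator` gives both admins full access, while an admin in another branch gets none.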

2.14 Data Point¶

DLS can be used to categorize organizational data into repositories, catalogs, datasets and distributions. Each of these is called a data point and has many standard and custom metadata fields that describe it.

3. Functional Capabilities¶

Data Lake service provides the following functional capabilities:

File – Any file within the configured size limit is accepted. The file name must be unique for a DLS user.

Catalog Search – A DLS catalog can be searched using free-text search.

File Share – A file shared with another DLS user becomes visible in that user’s catalog and can therefore be downloaded by that user.

Upload multiple files in a single API call

Upload a file and its metadata

Update the metadata set of an existing file

Register the path of external files in TCUP DLS

View file catalog

View file catalog in a directory-based hierarchy

Get file URI, metadata, file transfer status, file sharing status, linked files etc.

Filter file catalog using free text search

Download file using file-URI

Link two files with a relation name

Delete an existing link

Share files with other DLS users

Revoke shared files from other DLS users

Search shared files

View shared files in catalog

Create/search DLS users (admin privilege needed)

Replicate source directory in DLS

Archive existing file using API

Create directory

Give permissions to add, delete, read from directory

Upload files to a directory

Create metadata schema

Create rule for metadata while uploading file to directory

Lock or unlock a file in a directory

Move file from one directory to other

Upload multiple files

Upload multiple files and store as a compressed bundle in DLS

Retrieve file lineage data

Get statistics data

The following are the data point tasks:

Manage repository, catalog, dataset and distribution

Search for any datapoint

Create permission for datapoint

Access provenance records

4. Purpose/Usage¶

The Data Lake Service leverages the distributed file system already available in the TCUP cloud so that TCUP users can store large, arbitrary files.

Files stored in the Data Lake can serve various purposes. For example, data scientists can use DLS to store data files and program files. Other TCUP services can use it to store intermediate files. Files stored in the Data Lake are well organized and protected by access control. Every file is visible in the DLS catalog and can be filtered further using free-text search. Files can be downloaded from DLS through the API.

The Data Lake Service is a low-level service in which most attributes are user defined. A higher-level service or application can build specific use cases on top of it.

DLS can also be used to categorize organizational data into repositories, catalogs, datasets and distributions. Each of these is called a data point and has many standard and custom metadata fields that describe it.

5. Examples¶

The Data Lake Service can be used in many real-life industrial IoT use cases.

Consider a case where flight data from a flight data recorder of an aircraft needs to be analyzed for identifying opportunities for improving efficiency of fuel consumption.

The flight data records are packaged in binary files and are read from external file servers. A directory watcher program is developed to watch for newly generated files. Whenever a new file is detected, the program calls the TCUP DLS APIs and puts the raw file in the TCUP Data Lake along with the necessary metadata. In this case, the metadata includes the flight operator, date and time of the flight, aircraft tail number, airport code, destination code and so on. All this information is recorded as TCUP DLS metadata attached to the file.

A TCUP Task Service task is scheduled to run every 30 minutes. It scans DLS for any new raw flight data file and parses the file for all data fields that are relevant to fuel efficiency. It then generates a new file and puts it back into the data lake. The task also adds metadata for this intermediate file and creates a link between the original raw file and the intermediate file. Finally, another TCUP task processes the intermediate file and generates the final output, which is also written back to the data lake. All the files are linked together in DLS by relationship graphs.
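
The directory watcher step of this example can be sketched as follows. The sketch covers only the detection of new files and the hand-off to an upload function; the `upload` callable stands in for the DLS upload API, whose real endpoint and parameters are described in the API Guide and are not assumed here. The metadata keys are likewise illustrative.

```python
from pathlib import Path
from typing import Callable, Dict, List, Set

# Stand-in for the DLS upload API: takes a file path and metadata, returns a file URI.
Uploader = Callable[[Path, Dict[str, str]], str]


def find_new_files(watch_dir: Path, seen: Set[str]) -> List[Path]:
    """List files in watch_dir that have not been uploaded yet."""
    return sorted(
        p for p in watch_dir.iterdir() if p.is_file() and p.name not in seen
    )


def ingest(
    watch_dir: Path,
    seen: Set[str],
    upload: Uploader,
    metadata_for: Callable[[Path], Dict[str, str]],
) -> List[str]:
    """Upload every new file with its metadata and remember it as seen."""
    uris: List[str] = []
    for path in find_new_files(watch_dir, seen):
        uris.append(upload(path, metadata_for(path)))
        seen.add(path.name)
    return uris
```

A watcher would call `ingest` periodically. Passing the uploader in as a parameter keeps the detection logic independent of how the DLS API is actually invoked, and the returned URIs are what a later task would use to link the raw file to its intermediate file.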

6. Reference Document¶

Please refer to the following documents for more details about this service:

API Guide